Autumn 1994

Design in the age of digital reproduction

‘Multimedia’ may well be one of the most overused words of the 1990s. What does it mean for designers, what has been achieved so far and where are we heading?

Multimedia is a troublesome word. When language is reinvented by marketing departments it tends to obscure as much as it enlightens, and in the case of multimedia, the meaning has been stood on its head. As an adjective to describe an event, multimedia makes sense; as a noun it is no longer appropriate, since what it now describes is the convergence of many forms – dismantled and reconstructed in the digital flux – within a single medium.

Multimedia – or more accurately the digital medium – is not a means of communication, but one of creation, distribution and consumption. In the digital domain you are a user where once you were a reader, a player where once you were a viewer. The distinction is that between participant and bystander, activity and passivity, production and consumption, the presumption being that ‘interactive’ digital media is there to serve and be responsive, as a tool. I say presumption because so much of what we call multimedia is devoid of reciprocity, restricting its operation to point-and-click, read and view. The CD-ROM publishers are living off repackaged versions of old forms: electronic books, interactive magazines, interactive cinema – old wine in new bottles.

Interactive and multimedia are by no means synonymous. Does interaction simply mean making choices and if so, is the act of choice creative in itself? Is the interactive machine a conveyance – a medium – or something of a higher order: a tool for production? And in relation to what is it interactive – a conversation, a book, a painting, a movie? We inevitably judge the new media within the context of our experience with traditional forms of art and literature and often fail to make the distinction between the form of the work and its conveyance: between the novel and the printed page, the painting and the canvas, the movie and celluloid. Clearly the conveyance is dumb, but the notion that these forms involve no dialogue between artist and viewer and that the act of consumption is passive is false. In fact, the dialogue is simply indirect; it takes place through a process of public and private discussion, works of critical theory and media attention.

It seems, then, that there is a rising scale of interactivity from viewing and reading at one end, through interrogation, play or exploration, to composition and construction. Digital media can take any form expressible in numerical or logical terms and conceivable by the human imagination: viewer, finder, player, simulator, composer, recorder, copier, communicator. The medium has no fixed formal quality of its own and is only definable as a broad electronic domain in which new genres, species and sub-species of art will emerge to add to traditional means of expression.

Interactivity is hard work

Little of this is apparent from the way digital media is marketed by the computer software and print media conglomerates, which to date have gone no further than the lower orders of interactivity: viewing, reading, play and interrogation, all of which are compatible with the notion of a leisure market. ‘Passive’ viewing and reading, incidentally, are referred to as ‘browsing’, implying that no work is being done. This confusing distinction between work and play is repeated in the way the computer industry categorises its products: those oriented to control, calculation and production are known as operating system, utility, tool, and application; those oriented towards play are almost all called games. But because interactive digital media collapses consumption and production, such distinctions become meaningless. Attempts to clear the confusion have resulted in clumsy neologisms such as ‘edutainment’ and the risible ‘content-based software’. Otherwise the distinction remains, and it is dulling the ambition of software developers.

Cultural inhibition is only one of many obstacles preventing new media products from realising their potential, but it will probably be the most difficult to overcome. What Roland Barthes called ‘the pitiless divorce which the literary institution maintains between the producer of the text and its user, between its owner and its customer, between its author and its reader’ is deeply rooted in our social fabric and not something which can be cured by the application of mere technology. For some years now it has been apparent that the digital domain will enable its occupiers to create and compose free of material and physical constraints; to communicate and reproduce infinitely words, images and sound; to create dynamic and animated syntheses of text, sound, still and moving images; to simulate worlds and to explore different points of view; and to create new narrative forms which reflect a post-Newtonian philosophy in which certainty is replaced by a set of probabilities and instruction gives way to auto-didactic experimentation. This is the grand theory, but empirical evidence is still thin on the ground.

Compared to print, the amount of digital media in circulation is negligible. The number of CD-ROM titles published is in the low thousands, roughly the number of books launched in a single day. The vast majority mimic the book, just as early cars took their form from the carriage. And in most cases the novelty of non-linearity is not enough to make up for their shallowness, though perhaps that should not be surprising in the light of the problems of authorship and production.

The domain of engineers

Despite the migration of increasing numbers of designers and artists, the computer industry remains the dominant influence in digital media. Its culture is engineering and its aesthetic is mechanistic. Computers have come into existence to promote accuracy and control, not beauty and sensuousness, to standardise processes and functions to increase productivity, and to ‘process’ information more efficiently. These have been the goals of generations of cognitive psychologists who have devoted their careers to what Edward Tufte has called ‘the essential dilemma of a computer display... that at every screen are two powerful information-processing capabilities, human and computer. Yet all communication between the two must pass through the low-resolution, narrow-band video display terminal, which chokes off fast, precise, and complex communication.’ It is no accident that the grandly titled ‘human interface designers’ have chosen the metaphor of the push button and the cockpit control panel, rendered in trompe l’oeil three dimensions: depress to fire.

The engineer’s pursuit is a ‘clean’, synthetic, hyperreal environment which demands ever greater resolution, ever more processing power and memory – Platonic harmonies are at odds with the intuitive side of human existence. This computerland is made of straight lines and perfect circles; humans interact with it on the computer’s terms, not the other way around: they enter the virtual reality headfirst and leave their bodies behind. This is the dominant aesthetic of industrial society – but is it an intrinsic property of the computer?

To believe this is so would be technological determinism of the kind that insists that interactivity is implicitly empowering. The alternative, humanistic approach to the digital domain would have it enter the real world of everyday objects and spaces as an augmented reality of smart objects and environments. It is a vision shared by a dissident subculture which sees no reason why the computer should be inimical to art if understanding is valued over technical virtuosity, or incapable of accommodating the spontaneity, intuition, indeterminacy, plurality, and unpredictability of its human host.

Models for new media

The almost magical capacity of the computer to manipulate massive quantities of different kinds of information – logical, physical and textual – and to stimulate multiple senses in four dimensions presents an extraordinary opportunity and challenge to artists and designers. To create a unity of tension and resolution from the sheer volume of available material components is a task more akin to industrial design or architecture than to graphic design. It has become starkly apparent that there are no satisfactory existing models for the new media; these will arise out of the process of construction and as a function of the delivery method – now, almost always, the screen.

To invent new immaterial structures requires artistic imagination and a certain intellectual freedom. Perhaps this is why early examples have been so deficient – as if the question ‘How does it work?’ has preceded ‘What is it for?’ Pioneer software developers have of necessity concentrated on engineering the products, overcoming the complexities of authoring tools and cobbling together the many elements into some form of navigable entity, ignoring the niceties of function, styling and responsiveness. Ironically, the availability of an extremely cheap and capacious distribution medium, the CD-ROM, has exacerbated the problem, encouraging the sacrifice of responsiveness to resolution and – as a read-only memory – discouraging the constructive, or writerly, aspects of interactivity.

In the absence of models for computer-based art and information design other than arcade games, ATM screens, datatype terminals and conventional computer applications, the majority of commercial CD-ROMs that are not straightforward games have transposed the traditional book form to the screen and added the computer’s index and search facilities plus sound and video, which usually act as functionally separate elements. This was hardly innovative, but has had its successes. The ‘talking book’ for children – such as Broderbund’s witty Just Grandma and Me and Arthur’s Teacher Trouble – supplements spoken narrative with rudimentary participation in the form of finding and activating animated or talking objects. Music accompanied by scores and critical textual analysis has been a favourite and obvious application, as have encyclopaedias and dictionaries that take advantage of automated indexing, random access and cross-referencing. Taxonomical products such as Microsoft’s best-selling Encarta, its movie guide Cinemania and Microsoft Musical Instruments are worthy, crude and unwieldy: useful reference texts which maintain the separation between writer and reader. Voyager, the publisher of ‘expanded’ books, has created a more constructive model – essentially a study device which accepts that interactive learning is hard work, not osmosis. The book ‘engine’ adds a wide range of indexing functions to classical linear texts, together with appendices of subsidiary material such as criticism and author interviews and, most importantly, extraction and note-taking facilities. This model fulfils part of the specification of a true hypertext document in that it enables its reader to build on the written text.

Hypertext presupposes that the reader becomes an active participant in the work. The idea has its roots in contemporary critical theory, in the writing of Jacques Derrida and Roland Barthes and in experiments in narrative structures by novelists from James Joyce to William Burroughs and Robert Coover. Similar theories of non-sequential writing, in which text offers choices to the reader, emerged in computer-industry research into information retrieval – the term hypertext was coined by computer scientist Theodore Nelson in the 1960s. Hypertexts take as their starting point segments of text, often called ‘lexias’, arranged in modular networks rather than as linear forms. These ‘segments’ can also be occupied by sounds or images, hence hypertext becomes interchangeable with multimedia.

Hypertext sits in opposition to the idea of art and literature as complete and iconic. ‘“Text” has lost its canonical certainty,’ says Robert Coover, who has worked in the pioneering Hypertext Fiction Workshop at Brown University. ‘With its webs of linked lexias, its networks of alternative routes (as opposed to print’s unidirectional page turning) hypertext presents a radically divergent technology, interactive and polyvocal, favouring a plurality of discourses over definitive utterance and freeing the reader from domination by the author.’ The author creates a dramatic situation or environment within which the reader acts; there is no closure and no given principles, but instead a set of characterisations or probabilities, perhaps based on simple rules of conduct.

This notion of a ‘narrative’ with no centre, hierarchy, linearity or conclusion but rather a matrix of nodes and links offers a suitable model for many digital art forms, not merely literature. Film, for instance, can be broken down into scenes, each of which becomes a self-contained component or lexia. At the interactive cinema unit at MIT’s Media Lab, film students have created ‘thinkies’ – interactive films in which scenes of the same event shot from the point of view of different participants can be viewed in any order and supplemented by additional elements such as documentary texts. The role of the viewer is to construct his or her interpretation of the events. The possibilities have been extended by Dan O’Sullivan at Apple, who has developed ‘navigable movies’ in which the viewer has control of the ‘camera’ to move through pre-shot global sequences. Hollywood’s attempts at interactive cinema – such as the CD-ROM-based Quantum Gate – have been an instructive failure, taking the traditional form and repackaging it for multimedia by shooting multiple linear sequences with multiple, but distinctly finite, closures, commonly spliced together with shoot-’em-up or adventure games.

Games are nonetheless a useful starting point. As well as involving play, they operate according to rules rather than plot and can also involve composition. SuperNintendo Paint is a game as well as a tool with which one composes. SimCity 2000 involves play, but out of it one constructs virtual cities subject to the laws established by its authors. The rules are too simplistic to produce anything but a limited understanding of urban planning and politics, but that does not negate the method.

The description ‘game’ implies frivolity, yet gaming and working, or learning, are hardly mutually exclusive. At ‘Rolywholyover’, the recent exhibition at the Guggenheim Museum SoHo, New York, curated by composer John Cage with Julie Lazar, a computer installation by the designer David Rosenboom used hypertexts to allow ‘players’ to ‘experiment with strategies for composing music reflecting those employed by Cage.’ The system randomly sampled portions of the disk, extracting selections from compositions by Cage, Henry Cowell, Alvin Lucier and others. The user could direct the computer to compose an ‘assemblage-in-time’ by employing chance operations to splice together excerpts from the archive. Similar textual compositions could be created from overlaid quotations that would appear and disappear from the screen in accordance with an algorithm selected by the reader.

Typography in four dimensions

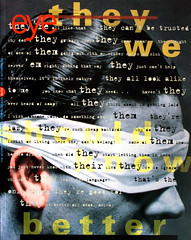

The processes of ‘reintegration’ and collage, producing new associations out of apparently contradictory elements, employing found objects and sampling, chance and randomness are the meat of digital media and familiar enough themes in modern art, design and popular culture. But when there is no fixed order of reading and the reader takes decisions formerly made by the author or designer, our traditional understanding of the machinery of communication and, consequently, of typography, disintegrates.

Robert Coover has already explored some of the implications of the new medium from a writer’s point of view: ‘There are no hierarchies in these topless (and bottomless) networks, as paragraphs, chapters and other conventional text divisions are replaced by evenly empowered and equally ephemeral window-sized blocks of text and graphics – soon to be supplemented with sound, animation and film ... With hypertext, we focus, both as writers and as readers, on structure as much as on prose.’

The mechanics of linear reading have resolved themselves into headings, body copy, paragraphs, sidebars, footnotes, and so on, denoting primary and secondary elements. In hypertexts all text is equally accessible, so that a statement and its cross-reference appear to exist within the same space and the linear hierarchy is replaced by interrelating elements which must be mapped and identified. New graphic languages are required to signal gateway and path, and to denote the nature of the transition between different kinds of texts.

In an environment of constantly changing states, properties of time, velocity, volume and luminosity replace size, weight and style as vehicles of direction, expression and emphasis. Type which moves, grows and shrinks, type which responds to events, sounds and its surroundings, type which can be viewed from the side and back as well as head-on is not the type we know from print. Rather than designing objects, we are designing processes, architectures of information in networks of three-dimensional data which are themselves reconfigurable by and responsive to their users. The design process expands from visual engineering to a choreography of different sensory elements which can be integrated and made to drive each other.

The most advanced research into a new typography for digital information systems has been carried out at the Visible Language Workshop at MIT’s Media Lab, which until her recent death was led by Muriel Cooper. The VLW has concentrated its efforts on the problems of navigation, presentation and transition in information design, and its solutions have drawn on conventions from traditional design disciplines as well as developing a range of new typographic devices. Early attempts to give the two-dimensional screen ‘depth’ resulted in type that blurs and fogs as it recedes into the background, signalling its continued presence while allowing type laid over it to be legible; type that takes on a contrasting shade to its background; type that ‘yellows’ as the information it contains ages; and type that vibrates to indicate that it carries links to other texts. The VLW has also played with sound attached to words so that they announce themselves or to confer character. As Cooper explained to me: ‘In two dimensions, it has been extremely difficult to create complex information structures. When we moved to the third dimension a lot of those problems went away – in exchange for new and interesting ones. One of the things that’s intriguing me is the relationship of the spoken word to the visual representation of character. Where do typography and speech join? What are the important cues and characteristics that typography visualized can give us – as compared to linear, but distinctly advantageous spoken communication?’

The most recent work has concentrated on three-dimensional structures through which the user can travel using an ‘infinite zoom’. Imagine it as navigating a constellation of units of information in a computer game. Texts grow larger as they come nearer, revealing further texts to which they are linked. In one example, the user loops through and around the text cells in three dimensions, with different movements representing different kinds of transition, perhaps from logical to physical or textual datatypes. Though such prosaic problems as how to treat leading and character weight in type which changes size from body text to headline will have to be resolved, the beauty of the infinite zoom is that it is so literal. There are no icons. The text represents itself, and even at a distance it is possible to get a sense of the information it contains. ‘We don’t believe in the power of icons,’ says Cooper. ‘Their scope is limited. How many real honest-to-god symbols are there in the world?’

Cooper’s thinking is a refreshing contrast to the conventional wisdom in the computer interface design community, which worships on the altar of icon and metaphor and appears to believe that the human mind is incapable of abstract thought. ‘I think what you’ve got to find is a way of shifting – as we do actually – from metaphor to paradigm to concept to abstraction. Metaphors can kill if you are compelled to stay within them. The most powerful manipulating ideas are abstract.’

Invention and artistry

The technical and visual sophistication of the VLW’s work has yet to infiltrate commercial products. The tendency to domesticate powerful tools through homely metaphors and to standardise presentation in the Windows format severely limits personal expression – generic software produces generic art. The computer community appears to see design as a last-minute paint job. An abstract from a tutorial at CHI ’94, the annual conference of software-interface designers and cognitive psychologists held in Boston in May, encapsulates this viewpoint: ‘The approach described is based on the communication-oriented design aesthetic seen in graphic design ... This tutorial will provide an introduction to the core competencies or ‘tricks of the trade’ that all students of visual design internalize.’

So a century of modern design theory is reduced to a few easily co-opted trade secrets – which at least explains why there is such slack integration of different media elements in CD-ROM products (‘tight-assed little branching things’, Cooper called them). There is much work to be done even in the most straightforward elements of screen presentation of text. Reading from a screen is a qualitatively and quantitatively different process from reading from a page: the screen shows only a tiny portion of the text, unlike the book or magazine which exposes the full spread, allowing the reader to scan quickly across the copy. With no more than a handful of fonts designed specifically for the screen – Lucida and Apple’s default screen fonts Chicago and Monaco come most readily to mind – the designer is limited by the rule that at low resolution, bigger is better. Anti-aliasing – the greying of the edges of type to decrease their jaggedness – is of value only at sizes above 20 point, yet to obtain that ‘classical’ book feel, products such as Microsoft Musical Instruments persist in using 12 point serifs which remain unreadable even though they are individually anti-aliased by hand. Even at the superficial level on which the computer industry understands it, graphic design has a great deal to contribute to digital media.

But the communication breakdown works both ways. The few self-conscious attempts by graphic designers to confront the new medium have been notable failures. One of the earliest, 8vo’s Octavo no. 8, distributed on CD-ROM, was a slow, unresponsive, fundamentally linear product. The spoken text was prescient and there were interesting typographic ideas which echoed some of the work of the VLW, but these were used as stylistic elements rather than functioning components: once type had fogged it could only be accessed by repeating an entire information loop. The recent Nofrontiere Interactiveland, also on CD-ROM and produced by Andrea Steinfl and Alexander Szadeczky, falls into an identical trap. Although formally beautiful and stylistically sophisticated, it fails in the basics of interface design by offering no feedback to its user and, despite its pretensions to ‘interactivity’, it offers performance rather than participation. The sloganeering content is made all the more banal by the way the animation of type ‘headlines’ every sentence. This medium imposes strict disciplines on the writer.

The interactive, cinematic and aural elements of digital design are foreign ground to most graphic designers, despite a long and fertile tradition in film and television title production. An understanding of process, pace and development should be a basic skill, especially in such kinetic disciplines as magazine design. But while visual dynamics are stock in trade and navigation is a familiar problem – in two dimensions – responsiveness and efficiency are not. There is something peculiarly idiosyncratic about the operation of text with images in digital media which requires great delicacy of touch, creating at its best a kind of visual poetry in which the writer must be intimately involved. In respect of its responsiveness to the user, testing and retesting at prototype stage are essential – a process closer to industrial design than to graphic design.

So whether graphic designers are the natural heirs to the design direction of this medium is open to question, as must be the future role of film and television directors or interface designers. Those applications which involve complex engineering and which are measured by their performance will be beyond the ingenuity of those without product design skills, and in the pioneering phase there will be a premium on invention as well as artistry. Clement Mok, a graphic designer who now develops expert systems for the medical profession, likens the role of the multimedia developer to that of a master architect in its combination of the skills of negotiator, organiser, problem-solver, artist and inventor. ‘You are trying to conjure a myriad variables into some cohesive object. It also has the attributes of product design: you are creating consumable bodies, objects, not surfaces.’ The relationship of the multimedia designer and software engineer mimics that of the architect and construction engineer in that it is one of negotiation, unlike the relationship between designer and printer, which has largely become one of instruction.

Colonising multimedialand

It is ironic that just when graphic designers are liberated from their dependence on typesetters and photographic technicians they should fall foul of the programmer. If designers are going to extend this medium, they will have to learn to ‘script’ and program. Intention will translate more easily into action as the operation of authoring tools becomes more transparent, though it is difficult to envisage a time when the complexity of animating and integrating multiple texts, sounds, and still and moving images will permit intuitive construction. Authoring tools such as Macromedia Director and Premiere require relatively simple programming skills and there are already software engines – such as the Voyager Toolbook for creating expanded books and the narrative hypertext system Storyspace – which enable the relatively naïve to integrate purely textual data. As graduates with the appropriate technical skills filter out of the few multidisciplinary interactive design courses – in the Media Lab and NYU’s Tisch School of the Arts Interactive Telecommunications Program in the US and the Royal College of Art’s computer-related design department and St Martin’s College of Art’s post-graduate computer course in London – we may begin to see a more intelligent sense of structure, synthesis and subject. We may also see the traditional design disciplines merge and reconstitute themselves anew.

Groups of designers are already infiltrating the Hollywood / Silicon Valley axis that lies at the centre of California’s multimedialand – in research companies such as Interval and in small production companies such as Pat Roberts’ Convivial Design and Arborescence, run by exhibition and graphic designers Chris Kruger and Amy Pertschuck. But some of the best examples of imaginatively designed digital artworks have emerged from less obvious sources. Seth Lambert, a 23-year-old Bostonian with no academic design training but six years’ experience in digital design, includes among a prolific output the rapid-firing, monochromatic essay in text and video for kgb america, a recently launched New York lifestyle magazine. Like Keith Seward and Eric Swenson of Necro Enema Amalgamated, producers of Blam!, Lambert values responsiveness over resolution, demonstrating the vigour of the medium and providing a welcome East Coast reaction against laid-back high-tech gloss.

Digital media still has enormous conceptual difficulties to overcome before it can add a contribution to understanding and inspiration to its established role in entertainment. The false distinction between multimedia, game and application must be eroded. There are fundamental weaknesses in the immersive HyperCard prototype of interactivity, which jolts the user between passivity and activity, swinging between intensely concentrated audio-visual activity and silent perusal of text. Structural models for navigation are primitive, creating a perception of vertigo and dislocation. (Coover, accurately, describes the structuring of hypertext as ‘so compelling and confusing as to utterly absorb and neutralize the narrator and to exhaust the reader’). The delivery mechanism is immature – until CD-ROM is superseded by online communication, the notion of hypertexts as individual components of vast interconnected libraries of information will be unfulfilled (on a narrow bandwidth, the World Wide Web on the Internet has already initiated this process). Digital media is indeed something far more fluid and ambiguous than the ‘content-based’ CD-ROMs with which we are becoming familiar. When it is delivered online, it will contain the element of flux and growth which could become a fundamental component of post-industrial art and design.

William Owen, graphic designer, design writer, London

First published in Eye no. 14 vol. 4 1994
