Autumn 2023

Artificial idiot

Unpredictable, cliché’d, wonderful, neither artificial nor intelligent – is AI the dumbest new kid on the block or the future of illustration? Marian Bantjes explores the weird world of text-to-image generators

Yellow Seal, generated early on when author Marian Bantjes started using Midjourney, 2023. ‘Many of the creatures seem incredibly enigmatic to me. This little guy has always been one of my favourites, with his crazy 1970s hair and weird snout.’ All captions by Bantjes.

I have been working (or playing) with the text-to-image generator Midjourney since January 2023. First because type designer Jonathan Hoefler invited me on to the platform, second because I wanted to write about it – and the controversy surrounding the use of AI – but it felt wise to use it first to better understand what it does.

AI can be compared to slot-machine gambling. It is highly addictive and entertaining, but what you get out is images rather than money. Like a slot machine, it can take many pulls before you win, but sometimes you win on the first try. Like gambling, you can get a run of luck where most of the images are three bars of gold; and bad runs where nothing lines up, and after an hour or so you give up and walk away.

It is highly unpredictable, and it’s that unpredictability that interests me most. But it has also brought a multitude of troubling questions regarding authorship, imagination, and what makes art ‘good’ as opposed to ‘bad’ (a subject that I’ve been fascinated by my entire adult life, and which I’ve actively played with in my work).

This is not the way I thought automation would go – direct to the ‘creation’ of work, rather than hovering around the refinement of work – and at first the idea of handing over the most fun part of making images to a machine was both absurd and abhorrent to me. But the more I used Midjourney, far from taking the place of my own imagination, it seemed to feed it. I could barely sleep with all the ‘what ifs’ racing through my brain. And while I have since settled on a (for now) definitive use for the images I’ve generated, and found a way to make them my own, I have a plethora of generated images that stand on their own as Art, although I feel little artistic ownership over them, and their ‘artiness’ troubles me. They are. They aren’t. They are. They aren’t.

Meanwhile, a storm has been raging, mostly in the illustration world. Panic about the implications and origins of AI-generated art, including a well-known class-action lawsuit against Stable Diffusion (see stablediffusionlitigation.com), seems to be spreading. Some of those who oppose not only the development but the use of AI have grown increasingly vitriolic in their responses to me and others, as though we are traitors to the profession, siding with the machine that will mow them all down.

I am someone whose former job, as a typesetter, was eradicated by the advancement of technology. I am also someone whose so-called ‘style’ was inexpertly copied by hundreds of people to the point that, short of putting me out of business, it made working more difficult and less lucrative than when I started. My attitude toward this is c’est la vie.

Ever since the industrial revolution, and perhaps even more since the digital revolution, machines and technology have copied, emulated and stolen from craftspeople, causing millions of people to learn new skills or new ways to use the new technologies, or to become extreme niche experts in their original field.

Some advancements, such as the invention of phototypesetting in the mid-1950s, eventually caused entire industries to collapse, and with them untold amounts of antiquated equipment were discarded. In the 1990s you couldn’t give metal type away, so it was melted down for scrap. Today it’s a speciality item, hard to find and increasingly expensive.

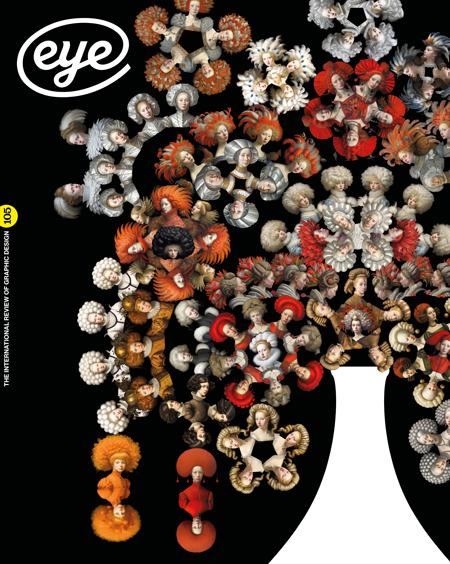

‘Midjourney is more than capable of making its own fascinating scenes. The two images came from the same prompt, and I’m not sure which I like more. The first has an exuberance of feathers and hair that appeals to me, but the early American painting style in the second, combined with incredible composition, creates a perfection of form.’

Type designers decried the invention and ad hoc use of Fontographer (1986) by all and sundry in the 1990s, and the explosion of type styles that emerged from the uninitiated and untrained, churning out ‘shitty’ fonts at an astonishing rate. With the help of that photographic abomination, Photoshop (1988), an indelible new era of graphic design evolved, much to the disgust and chagrin of proponents of the International Style and Beatrice Warde’s ‘Crystal Goblet’. Everything was ruined and the world was never the same again – or was it?

Now it’s 2023, more than two years since the reveal of the text-to-image platform DALL-E, and more than a year since Midjourney entered ‘open beta’. It seems as if a new AI image generator enters the market every day, and we are drowning in AI images. Put away your knitting needles and hide your patterns, the knitting machine has arrived and it’s here to steal everything you’ve ever made.

Or so it would seem from the hyperbolic venting of everyone from The Guardian to the Society of Illustrators (New York). ‘There is no ethical way to use the major AI image generators. All of them are trained on stolen images, and all of them are built for the purpose of deskilling, disempowering and replacing real, human artists. They have zero place in any newsroom or editorial operation, and they should be shunned.’ This quotation by an artist named Molly Crabapple from the LA Times has been making the rounds, and her open letter to ‘Restrict AI from publishing’ is one of many such letters and petitions popping up to stop the great evil of AI from taking our jobs and creating ‘art’ all by itself.

‘Midjourney’s ability to create astonishing compositions is what surprises me most. This gold-and-white fish, flying over a red silk scarf, is one of my favourites. It is, to me, a gorgeous piece of Art. The fish in its red fishnet stocking, its gold picked up in the silk, and the pièce de résistance – that odd, folded piece of white linen below its fin. How does it do it?’

Advanced hyperbole

There are a number of fundamental misunderstandings at the root of all this, and the first is about what Artificial Intelligence is, and where it came from.

There is no one AI. There is a quest by computer scientists (mathematicians, programmers along with, presumably, neurologists, sociologists, psychologists and other curious minds) to develop Artificial Intelligence, or, as it is now known, Artificial General Intelligence (AGI), which is intelligence equal to that of humans. Everything that we have seen in our lifetimes that we call AI, or that uses AI, is an exploratory step along the way to AGI, but none of it is definitively AI. The source of AI research is the same as computing – it has come from both academic research labs worldwide and, increasingly, corporate labs. Along the way, individuals and corporations have spun off products or introduced AI models into existing products, sold for profit.

There is big money in AI. Not in ‘putting artists out of business’ in order to reap the profits of our vast incomes, but in thousands of potential (mostly over-hyped) applications seeking venture capital investment.

‘Four images from the series I named ‘WTF-’, because a mistake in my prompt made everything go nuts. The first set of images were bizarre enough for me to regenerate them, and an ever-morphing series of madness ensued. I made 78 iterations – all the same, but different – all incredibly weird.’

Author and digital activist Cory Doctorow writes: ‘If the problem with “AI” (neither “artificial,” nor “intelligent”) is that it is about to become self-aware and convert the entire solar system to paperclips [a reference to Frank Lantz’s Universal Paperclips game], then we need a moonshot to save our species from these garish harms. If, on the other hand, the problem is that AI systems just suck and shouldn’t be trusted to fly drones, or drive cars, or decide who gets bail, or identify online hate-speech, or determine your creditworthiness or insurability, then all those AI companies are out of business … For those sky-high valuations to remain intact until the investors can cash out, [they want us] to think about AI as a powerful, transformative technology, not as a better autocomplete.’

Doctorow’s point is that the hype of the supposedly vast power of AI helps to fund projects using it, whether realistic or not, for the sake of profit. Huge amounts of venture capital are being spent on AI startups, often based on a lie of how powerful it is.

It is complicated and technical, but what I can tell you is that despite huge advances in certain areas (mostly due to the availability of more data and faster processing), humans are nowhere near reaching AGI, and the path they are currently on is not the path to AGI. It’s interesting, even astonishing, but it is not intelligent and isn’t getting more intelligent. No one knows what the next step is to send it in the direction of General Intelligence, but it is not more data or more power.

Both large language models and text-to-image generators are generative models: they take enormous amounts of data and use it to generate plausible results from the data they have observed – or, if you will, ‘learned’. I will narrow this down to text-to-image models because these are the ones that everyone in illustration and design is freaking out about. We’re talking about Stable Diffusion, DALL-E, Midjourney and others. They do not cut and paste, they do not collage – as erroneously described by the aforementioned class-action lawsuit in progress – and they do not regurgitate.

‘Experimenting with a house full of rabbits, I was delighted to discover that Midjourney made no distinction between rabbits as animals, as images on walls or on fabric and indeed often fused the two together, with rabbits being both on and in the furniture at the same time.’

By looking at millions of pictures of things that have been labelled or tagged – for instance, rabbits – they learn which arrangements of pixels equal rabbit and then ‘predict’ which pixel arrangements are most likely to equal rabbit. This is called a prediction model. This is perhaps their greatest similarity to human intelligence: the ability to identify and recreate. What they don’t understand is that ‘rabbit’ is a living thing separate from, but similar to, other living or inanimate things, that it has internal organs, is sentient, or any of the symbolism or associations with humans that we all either inherently or explicitly understand – things that children easily understand not only by being told, but by understanding rabbits in the context of animals, the properties they share, and our emotional connections to them.

So these models can create an arrangement of pixels that they predict will be closest to the request (text prompt) made by a human, based on their mathematical understanding of pixel arrangements (images) on which they have been trained. Every image is created anew, every image is unique. This is, to me, the most astonishing thing about these models – the mind-blowing variation in images generated not only from the same text prompt, but in subsequent versions generated from the generated images. I have experimented with this, generating the same image over and over and each generation is both the same and very different.

In one example, the shape of a shark morphed, over 78 generations, into an alligator, a hippo and back, while all the objects around it underwent similar transformations, including turning into boxes and piles of goo, while maintaining a generality of the same image. For someone interested in patterns and, more specifically, non-identical patterns, this has become a useful tool, as well as better-than-TV entertainment.

If these image generators excel at one thing, it is Surrealism. I would be tempted to call Midjourney a creative genius, except that there’s no creativity about it. Just numbers and pixels, predictions and a very, very wrong idea about how the world works. In a recent run of producing images of rabbits on furniture, Midjourney had no concept of the dimensional reality of rabbits. It merged them with furniture, walls, sometimes with their bodies half in and half out of inanimate objects.

‘All I said was “Lemon Camel”! When I first started playing with Midjourney in January 2023, one of the first things I burrowed into was making strange creatures. This was, on the one hand, incredibly easy to do, and on the other, impossible to control and full of amazing surprises.’

Thievery corporations

It is true that image generators are trained on existing images from the internet, but they absorb everything, many millions of images, looking for tags and captions and, eventually, all the familiar objects they have already learned. Every time you tag or identify an image on the internet, you are helping AI; every time you fill out a ‘captcha’ identifying streetlights, bicycles, crosswalks, you are helping AI.

Meanwhile, of the people I know or know of who have been vociferous in their complaints, all of them post their work on Instagram. Every time you upload to Instagram, Facebook, Pinterest, TikTok or whatever, you are helping AI – and you are giving up your rights to do anything about it. This is part of Meta’s user agreement: ‘Specifically, when you share, post, or upload content that is covered by intellectual property rights on or in connection with our Products, you grant us a non-exclusive, transferable, sub-licensable, royalty-free, and worldwide license to host, use, distribute, modify, run, copy, publicly perform or display, translate, and create derivative works of your content (consistent with your privacy and application settings).’ (Meta owns Facebook and Instagram.) There are more than 50 billion pictures and videos on Instagram, with 1.3 billion images being added daily. Facebook has more than 250 billion photos, and users are uploading 2.1 billion new photos each day. There are 750 billion images on the internet, including 136 billion images on Google Images.

It is worth reading a ten-part article by Hayleigh Bosher, an Intellectual Property Law academic at London’s Brunel University, published on The Conversation website in 2018. Among many startling things, Bosher points out that ‘Instagram can give away or sell your content’ and that ‘You could be sued for copyright infringement’, as Khloé Kardashian found out in 2017 (though that particular legal action was dropped).

‘This creature emerged from the prompt “banana walrus blue fronds”. Can I ever get him back? Nope.’

So when artists think they are being ‘targeted’ I have to wonder if this response is rooted more in one’s sense of self-importance or ubiquity of style. For those who have claimed copyright infringement, I have been unable either to replicate their work with a text prompt or, seeing comparison images, to tell that artist’s work from that of hundreds of others who work in a similar medium or style. It’s a numbers game, at times tweaked by human programmers. If they’re targeting anyone it’s Van Gogh and Vermeer. Oh, and that AI-generated version of Vermeer’s Girl with a Pearl Earring that was turning up in top Google hits for the artist? It was hung in place of the original in the Mauritshuis Museum, when said original was out on loan to the Rijksmuseum. From February to June 2023, the exhibit ‘My Girl with a Pearl’ displayed fans’ recreations of the seventeenth-century masterpiece, including versions featuring self-portraits, miniature art and a glamorous dinosaur.

So it got a lot of press and reproduction because it was created with AI (as opposed to, say, the ‘Girl with a Pearl’ elephant painting), which raised it high in Google’s algorithm. Meanwhile, my top hit for the painting returns an image from the movie, featuring Scarlett Johansson. I fail to see the difference in implication.

But what does it mean to copy, or in fact, ‘steal’ an image? When we search for an artist and look at their work, are we stealing? What about if we collect their work on a platform like Pinterest? What if we use collections for so-called ‘inspiration’, or reference? The vast majority of us look at, collect, share and even use images (and films and music) made by other people all the time, using them as desktop backgrounds, putting them on our phones, using them in presentations, inter-office memos and online memes. Is any of this stealing? In some cases yes, in some no, but the practice is rampant, and the most important thing is not the method used to capture images, but the education around what is considered culturally acceptable – China has a long history of copying, and the Chinese don’t see our obsession with ‘the original’ in the way we do in the West – and more importantly, legal.

Copyright laws vary between countries but in the United States, where much of the hysteria is happening, you cannot copyright style. Unless someone specifically lifts your image and reproduces it, there is no case. The margins for ‘transformational work’ are somewhat indeterminate.

I have had my work outright lifted, and sent out my lawyer; and I’ve had it emulated, about which I can do nothing, and – many would say – nor should I be able to. However, there are far easier ways to ‘steal’ other people’s work than attempting to generate it through AI, which knows almost nobody in illustration and design. With Stefan Sagmeister’s permission I tried to emulate his famous Lou Reed image (the poster for Set the Twilight Reeling, 1996) and the results were abysmal – Midjourney knows who Reed is, but not Sagmeister, and my increasingly specific attempts to art direct it yielded results far worse than if I had just picked up a pen and scrawled some writing across a face.

As roboticist Rodney Brooks, former head of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), writes on rodneybrooks.com: ‘Many successful applications of AI have a person somewhere in the loop. Sometimes it is a person behind the scenes that the people using the system do not see, but often it is the user of the system, who provides the glue between the AI system and the real world.’

For every single claim that ‘AI is doing this’ or ‘AI is doing that’ it’s important to remember that AI is a non-sentient algorithm that is doing exactly nothing until a human asks it to do something. The battle against AI on a copyright basis is a battle against all the humans who either do not understand or blatantly disregard intellectual property rights. If you are truly concerned about people stealing your images, you should look, not to AI, but to students and unformed image-makers who lack the knowledge, imagination or skill to create work of their own (students’ portfolios are notorious for featuring work they didn’t author). You should also look to Etsy and every print-on-demand site on the internet – if those ‘David Hockney’ pillows on Redbubble (featuring his actual work) are licensed, I will eat one of them.

I recently saw an anti-AI presentation by a designer in which he used, as a depiction of an elderly man, an image of the character Carl Fredricksen from the Pixar movie Up. He should have used AI instead of infringing copyright in a more egregious manner than he was accusing Stable Diffusion of doing.

For those designers, illustrators and artists who use their brains to think laterally in coming up with concepts, who draw on their knowledge of history and contemporary culture, who use wit or humour in their work, who rely on structure, sense and order, text-to-image generators are no threat to you. None of those things can be done by AI: it cannot think, research, plan, strategise; it has no knowledge of context, of your client, your place, or your culture; it makes no references, it cannot allude to ideas, know what is in or out, or play visual games.

In short, it cannot design, and it cannot make intelligent illustrations. It can only make predictive images based on what humans put into it – and as I mentioned – it’s extraordinarily difficult to control. There are hundreds of Midjourney text prompts published on the internet to help people get exactly what they want, and yet even those who create long and complicated prompts struggle with Midjourney’s misunderstanding and seeming wilfulness.

‘This AI-generated image has both fantastic composition and some kind of story, as the floating angel-like bat-rat thing in lace vestments confronts a smaller but more malicious bat-thing in a darkened room. Some kind of debris and possible insect lies below them.’

I talked to Pum Lefebure about the creation of Design Army’s ‘Adventures in A-Eye’ ad campaign for Georgetown Optician. Contrary to popular belief that you can generate an image in seconds (you can, but it’s doubtful it will be the image you want), Lefebure described her struggles getting the kinds of images from Midjourney she could use. ‘I think that older people with more experience in directing photoshoots, lighting, types of cameras and film; as well as a knowledge of art and design history, have an advantage in directing AI,’ she says. Her prompts are long and complex. Nonetheless she says she spent more than a week – 40 to 50 hours – generating images to get the collection she was happy with. Because it is so uncontrollable, they decided to use the colour pink as a unifying theme to bring disparate images together. After she had generated, collected and curated the images, she says they were about 50 to 80 per cent done, depending on the image. Then they had to be colour corrected, retouched and have the glasses products Photoshopped in.

Lefebure told me that a ‘relationship’ seemed to develop between herself and the machine. I have also had this sense, when I spend a lot of time with it, that it seems to get to know me and deliver more of what I like. I had put this down to my human perception, but Lefebure says that when she gave the exact same prompts to other members of her team, they didn’t get similar results. What worked for her wasn’t working for them. We also talked about the ‘on’ and ‘off’ days. We’ve both experienced that kind of ‘gamblers’ luck’, when we just get image after image that is fascinating and wonderful. In contrast are the days when it doesn’t seem in tune with us. ‘Sometimes it would just go so wrong,’ she says, ‘I would just keep pushing and pushing until it went right, and other times … well, it was just a bad day. Try again tomorrow.’

‘Image returned from the prompt “iron apple”. Ornament of a particular sort (bad) will show up at any moment – sometimes creeping in at the stage between the thumbnail and the ‘upscaled’ version. It comes from Midjourney’s penchant for fantasy art, which in turn is because it has devoured the entirety of DeviantArt.com.’

It may be my imagination, but I suspect that Midjourney, for one, has been getting less ‘imaginative’ over even the few months I have been using it. It has learned hands, more or less (undoubtedly by human programming intervention) and hooves, seemingly being less likely to make indeterminate blobby shapes or to separate them from the bodies they belong to. Previously when I asked for disparate things, it would fuse them together, making incredibly weird and different combinations, ever-changing and reassembling. Now, the same prompt is more likely to just give me the two separate things in the same image. Lefebure and I talked about this, too – she had the same sense. We both began using it in January 2023, in version 4 (it is now at version 5.2), and she describes it as being,

‘Like a two-year-old, so full of curiosity, with sparkling bright eyes, making all sorts of senseless combinations and mistakes – like the fingers! I loved the hands with seven fingers!’

I agree.

‘But now,’ she says, ‘It’s more like a teenager, who is self-conscious and conforming.’

It’s growing up!

‘Yes! I want the two-year-old back!’

‘Three images from the same prompt that show Midjourney’s typical inconstancy. The girl in the image has roughly the same face; on her head is something that is either made of flowers or is a big flower or something that looks like a wig of flowers; the style of dress is never exactly the same and the bits of red in the sleeve vary. She holds two or three white rabbits, and is surrounded by more rabbits – possibly a wallpaper. That AI can create these images over and over, ad infinitum, simply blows my mind.’

Good art, bad art, real art?

One of the things that has not changed is Midjourney’s penchant for kitsch, particularly from the ‘fantasy’ genre of internet art: wistful forests, elves, princesses, warriors, swords and sorcery, elaborate ornament, and highly romanticised images of women are pervasive in fantasy world depictions. Certain words have to be avoided in prompts. Oddly, one of those words is ‘iron’: use it and you will not get strong, bulky, rusty or heavy iron, but twisted ornamentations, often in what looks more like brass. And don’t even think of using the word ‘beautiful’. Midjourney also loves including people, particularly its idea of beautiful white women (which is more varied than you might think).

These are things that I struggled against until I had the idea to play into it. Among other things, I generated a couple of hundred warriors in gold, that came out satisfyingly over-muscled, over-ornamented, in a fantasy style that included some of the most ridiculous excuses for swords I can imagine. These I collected together in a single image, which I printed at 44 × 52 inches, creating a spectacular result filled with insane details.

For some people this process of mine might satisfy their objection to what they perceive as a lack of ‘authorship’, or the human hand and intent in AI-generated images. When they imagine ‘pushing a button’ and the machine doing all the rest, they take umbrage at the ease of use, the lack of skill, and what they perceive as ‘soullessness’. Welcome to the mid-nineteenth century and the emergence of photography.

Early-nineteenth-century European painting was a honed skill in the aid of capturing reality as closely as possible, and many artists worked for the rich, creating portraits, paintings of great houses and landscapes. That suddenly someone with no skill whatsoever could look through a box and click a button to produce an exact replica of the image in front of them was considered an abomination and a direct threat to the livelihood of artists. And it was. But artists, instead of going out of business forever, became interested in capturing more than what was immediately visible. After the beginning of the Impressionist movement the camera was soon seen as incapable of capturing the soul and able only to imitate the surface of things. James McNeill Whistler wrote in The World journal in 1878: ‘The imitator is a poor kind of creature. If the man who paints only the tree, or flower, or other surface he sees before him were an artist, the king of artists would be the photographer. It is for the artist to do something beyond this.’

‘People have said that they find AI images “soulless” but this creature, which contains no rabbit DNA, with its fur coat and wary look seems positively imbued with thoughts and intent. I call him The Spy and I am certain he is not to be trusted.’

The camera changed the art world completely, and while at first shunned, photographers with imagination and curiosity were able to use it to see in new ways. Today of course, photography is fully accepted as a genre of art.

But what is ‘soul’ anyway? Unless speaking of the belief in a literal ethereal life force that humans – and perhaps some animals – are purported to have, to say that an artistic depiction has ‘soul’ is merely a human projection of feeling onto an image. There are plenty of artists whose work I would consider soulless, while others would disagree. I have certainly generated images from Midjourney with expressions that speak to me in an uncanny way. How can an algorithm produce such a compelling character as The Spy? It is the human viewer, not the machine, that determines this presence of life.

Art made by chance, art made by forces outside of the artist (wind, temperature, etc.), art made by instructions (Sol LeWitt, who in 1967 wrote: ‘The idea becomes the machine that makes the art’), computational art, found or ready-made art, the cataloguing of ethereal events – these have existed for a long time. So has the use of computer programming and automation. This very magazine used the Mosaic program with the HP Indigo digital printer to create 8000 unique covers for Eye 94. Joshua Davis has been using programming to generate an endless array of imagery since the turn of the century, and in 2008 I created the basic patterns from which Karsten Schmidt of PostSpectacular used programming to generate unique borders for Faber’s ‘Faber Finds’ print-on-demand books (see David Crow’s ‘Magic box’ in Eye 70).

‘I generated more than 200 fantasy-style warriors – most of them in one sitting. While the images started out static, the more I generated, the wilder they seemed to get, developing more pointy bits and complex pieces that started to fall off their bodies in a kind of frenzy. And the shoes! Midjourney generated everything from boots to sandals to high heels, all with weird bits and extensions. I’ve started drawing some of them.’

All of these have been accepted – sometimes after some struggle – into the category of art, despite the occasional absence of the ‘hand of the artist’. I believe that human-made art using AI as a tool will go the same route.

This is not to say that there is nothing to be concerned about regarding the widespread use of generative AI. Bias, stereotypes, and corporate desire to get anything it can for free are real concerns, but they are concerns we’ve faced before, and concerns that exist well outside of the question of AI (corporate greed, for example). These are moral, ethical and political problems that have more to do with humanity than AI.

At this point I like to quote what Michael Bierut said in the 2000s, when the design community was freaking out about the thousands of one- and two-year design programmes churning out half-baked designers, as well as everyone, their secretary, and their cousin’s children performing the duties of designer. He said, ‘Do good work.’

To that I will add: ‘Use your brain.’

Pegasi. ‘Though many Midjourney images are horrible and of no use to me, I’ve discovered ways to trick it into avoiding such tropes, by working with Midjourney’s taste for kitsch instead of struggling against it. I started by generating more than 170 pegasi. Each is in many ways the same, but all are fascinatingly different.’

Marian Bantjes, graphic artist, writer, Bowen Island, Canada

First published in Eye no. 105 vol. 27, 2023
